Local Shannon Entropy Measure with Statistical Tests for Image Randomness

Introduction

In this paper we propose a new image randomness measure that uses Shannon entropy over local image blocks. The proposed local Shannon entropy measure overcomes several weaknesses of the conventional global Shannon entropy measure, including unfair randomness comparisons between images of different sizes, failure to discern image randomness before and after image shuffling, and possibly inaccurate scores for synthesized images. Because statistical tests pertinent to this new measure are also derived, the measure is both quantitative and qualitative. The parameters of the local Shannon entropy measure are further optimized to better capture local image randomness. The estimated statistics and observed distribution from 50,000 experiments match the theoretical ones. Finally, two examples apply the proposed measure to assess randomness among shuffled images and among encrypted images. Both examples show that the proposed method is more effective and more accurate than the global Shannon entropy measure.

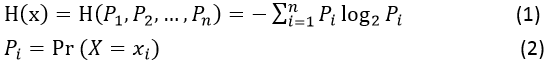

Shannon entropy measure and properties

Shannon entropy, named after Claude Shannon, was first proposed in 1948. Since then, it has been widely used in the information sciences. Shannon entropy measures the uncertainty associated with a random variable; specifically, it quantifies the expected value of the information contained in a message. The Shannon entropy of a random variable $X$ is defined in Eq. (1), where $p_i$, defined in Eq. (2), denotes the probability that $X = x_i$, and $x_i$ is the $i$-th of the $n$ possible values (symbols) of $X$.

$$H(X) = -\sum_{i=1}^{n} p_i \log_2 p_i \tag{1}$$

$$p_i = \Pr(X = x_i) \tag{2}$$

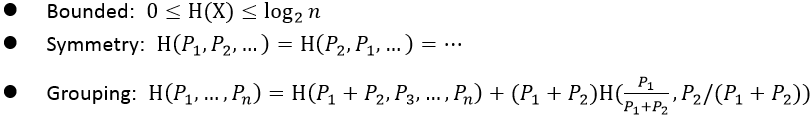
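As a minimal sketch in plain Python, Eq. (1) can be computed directly from a list of probabilities. The function name `shannon_entropy` is our own illustration, not from the source. Zero-probability terms are skipped, following the usual convention $0 \log_2 0 = 0$.

```python
import math
from typing import Sequence


def shannon_entropy(probs: Sequence[float]) -> float:
    """Eq. (1): H(X) = -sum_i p_i * log2(p_i), in bits.

    Terms with p_i == 0 are skipped (convention: 0 * log2(0) = 0).
    """
    total = sum(probs)
    if not math.isclose(total, 1.0, abs_tol=1e-9):
        raise ValueError(f"probabilities must sum to 1, got {total}")
    # Each term -p * log2(p) is non-negative for 0 < p <= 1.
    return sum(-p * math.log2(p) for p in probs if p > 0)


print(shannon_entropy([0.5, 0.5]))       # fair coin: 1.0
print(shannon_entropy([1 / 256] * 256))  # uniform over 256 symbols: 8.0
print(shannon_entropy([1.0, 0.0, 0.0]))  # certain outcome: 0.0
```

The uniform case reaches $\log_2 n$ bits, the largest value possible for $n$ symbols, while a certain outcome carries no uncertainty at all.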

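Shannon entropy has, among others, the following properties:

1. Non-negativity: $H(X) \ge 0$, with $H(X) = 0$ if and only if one outcome has probability 1.

2. Upper bound: $H(X) \le \log_2 n$, with equality if and only if $X$ is uniformly distributed ($p_i = 1/n$ for all $i$).

3. Symmetry: $H(X)$ is unchanged by any permutation of $p_1, \ldots, p_n$; it depends only on the probabilities, not on which symbol carries which probability.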

In the context of digital images, an M×N image X can be interpreted as a sample from an L-intensity-scale source that emitted it. As a result, we can model the source symbol probabilities using the histogram of the image X (the observed image) and generate an estimate of the source entropy.
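Following the histogram model above, here is a minimal NumPy sketch of the global Shannon entropy of an image. It assumes a 2-D array of integer intensities in $[0, L)$ (for example `uint8` with $L = 256$); the function name `global_entropy` is illustrative. It computes $-p \log_2 p$ as $p \log_2(1/p)$, which is the same quantity.

```python
import numpy as np


def global_entropy(image: np.ndarray, levels: int = 256) -> float:
    """Global Shannon entropy (bits) of an image, estimated from its histogram.

    Assumes integer intensities in [0, levels), e.g. uint8 with levels = 256.
    """
    counts = np.bincount(image.ravel(), minlength=levels)  # intensity histogram
    probs = counts[counts > 0] / image.size                # empirical p_i, zeros dropped
    return float(np.sum(probs * np.log2(1.0 / probs)))     # -sum p_i log2 p_i


rng = np.random.default_rng(0)
noise = rng.integers(0, 256, size=(512, 512), dtype=np.uint8)
flat = np.full((512, 512), 128, dtype=np.uint8)
print(global_entropy(noise))  # slightly below the 8-bit maximum
print(global_entropy(flat))   # 0.0: a single intensity carries no uncertainty
```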

Local Shannon entropy measure and statistical tests

Local Shannon entropy measure

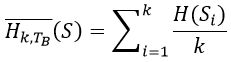

We define the $(k, T_B)$-local Shannon entropy measure with respect to local image blocks using the following method:

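Step 1. Randomly select $k$ non-overlapping image blocks $S_1, S_2, \ldots, S_k$, each with $T_B$ pixels, within a test image $S$ of $L$ intensity scales.

Step 2. For each $i \in \{1, 2, \ldots, k\}$, compute the Shannon entropy of block $S_i$:

$$H(S_i) = -\sum_{j=0}^{L-1} P_i(j) \log_2 P_i(j)$$

where $P_i(j)$ is the fraction of the $T_B$ pixels in $S_i$ that have intensity $j$.

Step 3. Calculate the sample mean of the Shannon entropy over the $k$ image blocks $S_1, S_2, \ldots, S_k$:

$$\overline{H_{k,T_B}}(S) = \frac{1}{k} \sum_{i=1}^{k} H(S_i)$$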
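Steps 1 to 3 can be sketched in NumPy as follows. This is an illustration under stated assumptions, not the authors' implementation: the image is a 2-D integer array, each block is a square of `side × side` pixels (so $T_B = \text{side}^2$), and non-overlap is guaranteed by drawing $k$ distinct tiles from a fixed grid. The method only requires randomly selected non-overlapping blocks, so other placement schemes are equally valid. All function and parameter names, and the example values `k=30, side=44`, are hypothetical.

```python
import numpy as np


def block_entropy(block: np.ndarray, levels: int = 256) -> float:
    """Step 2: Shannon entropy (bits) of one block from its intensity histogram."""
    counts = np.bincount(block.ravel(), minlength=levels)
    probs = counts[counts > 0] / block.size
    return float(np.sum(probs * np.log2(1.0 / probs)))


def local_entropy(image: np.ndarray, k: int, side: int,
                  levels: int = 256, rng=None) -> float:
    """(k, T_B)-local Shannon entropy with square blocks, T_B = side * side.

    Assumption: blocks are k distinct tiles of a non-overlapping grid.
    """
    rng = np.random.default_rng() if rng is None else rng
    rows, cols = image.shape[0] // side, image.shape[1] // side
    if rows * cols < k:
        raise ValueError("image too small for k non-overlapping blocks")
    # Step 1: choose k distinct grid tiles at random (no replacement).
    tiles = rng.choice(rows * cols, size=k, replace=False)
    entropies = []
    for t in tiles:
        r, c = divmod(int(t), cols)
        block = image[r * side:(r + 1) * side, c * side:(c + 1) * side]
        entropies.append(block_entropy(block, levels))  # Step 2
    # Step 3: sample mean over the k blocks.
    return float(np.mean(entropies))


rng = np.random.default_rng(1)
noise = rng.integers(0, 256, size=(512, 512), dtype=np.uint8)
print(local_entropy(noise, k=30, side=44, rng=rng))  # below 8: each block has only 1936 pixels
```

Restricting blocks to a grid keeps the non-overlap check trivial; a scheme that places blocks at arbitrary offsets would need explicit overlap rejection.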

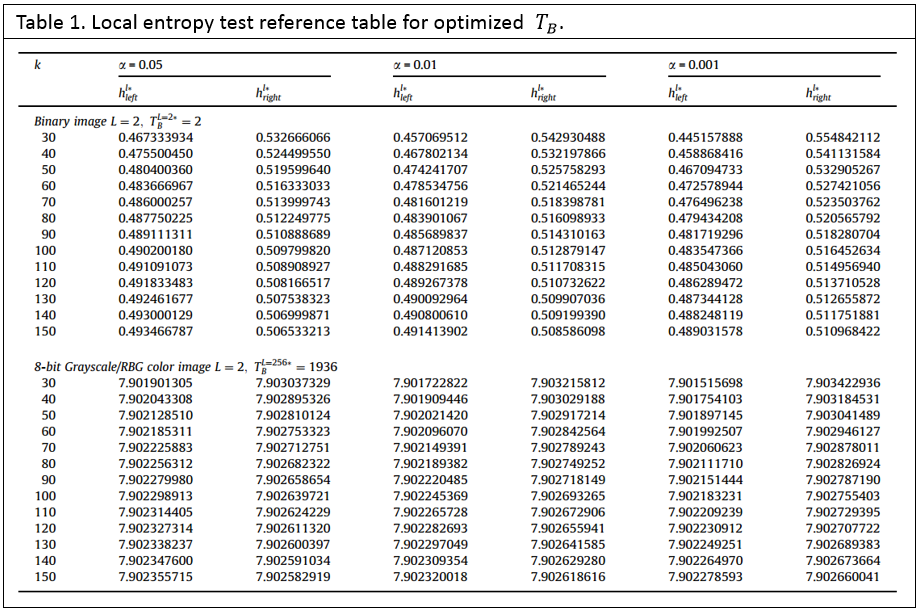

Statistical tests

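Table 1 provides the acceptance intervals of the $(k, T_B)$-local Shannon entropy test under various significance levels for different values of the parameter $k$. A test image is regarded as random at a given significance level if its $(k, T_B)$-local Shannon entropy falls within the corresponding interval.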

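The values of Table 1 are not reproduced here. As a hedged sketch of how such acceptance intervals can be obtained, the code below estimates the mean $\mu$ and standard deviation $\sigma$ of a single block's entropy for truly random blocks ($T_B$ i.i.d. uniform pixels over $L$ levels) by simulation, then forms the two-sided interval $\mu \pm z_{\alpha/2}\,\sigma/\sqrt{k}$. The normal approximation for the mean of $k$ block entropies (central limit theorem), the Monte Carlo estimation of $\mu$ and $\sigma$, and all names are our assumptions; the resulting bounds are estimates and should not be read as the entries of Table 1.

```python
import numpy as np
from statistics import NormalDist


def block_entropy(pixels: np.ndarray, levels: int = 256) -> float:
    """Shannon entropy (bits) of a set of pixels from its histogram."""
    counts = np.bincount(pixels.ravel(), minlength=levels)
    probs = counts[counts > 0] / pixels.size
    return float(np.sum(probs * np.log2(1.0 / probs)))


def acceptance_interval(k: int, t_b: int, alpha: float, levels: int = 256,
                        trials: int = 2000, seed: int = 0) -> tuple[float, float]:
    """Approximate two-sided (1 - alpha) acceptance interval for the
    (k, T_B)-local entropy of a truly random image (assumption: normal
    approximation, with mu and sigma estimated by simulation)."""
    rng = np.random.default_rng(seed)
    # Entropies of `trials` simulated random blocks of t_b uniform pixels.
    sims = np.array([block_entropy(rng.integers(0, levels, size=t_b), levels)
                     for _ in range(trials)])
    mu, sigma = sims.mean(), sims.std(ddof=1)
    z = NormalDist().inv_cdf(1 - alpha / 2)  # upper alpha/2 standard-normal quantile
    half = z * sigma / np.sqrt(k)
    return float(mu - half), float(mu + half)


lo, hi = acceptance_interval(k=30, t_b=1936, alpha=0.05)
print(f"accept as random if {lo:.4f} <= local entropy <= {hi:.4f}")
```

A smaller significance level widens the interval, and a larger $k$ narrows it in proportion to $1/\sqrt{k}$.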

Why local and not global?

Because the local Shannon entropy measures image randomness by computing the sample mean of Shannon entropy over a number of non-overlapping and randomly selected image blocks, it is able to overcome some known weaknesses of the global Shannon entropy:

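1. Inaccuracy: The global Shannon entropy sometimes fails to measure the true randomness of an image. For example, a synthesized image in which every intensity occurs equally often attains the maximum global score even if its pixels form a smooth gradient, and shuffling an image's pixels leaves its histogram, and hence its global score, unchanged. Unlike the global Shannon entropy, the $(k, T_B)$-local Shannon entropy captures the randomness of local image blocks, which might not be correctly reflected in the global Shannon entropy score.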

2. Inconsistency: What ‘global’ covers varies with image size, and the histogram-based entropy estimate depends on how many pixels it is computed from, so global Shannon entropy scores of images with different sizes are not directly comparable. This makes the global Shannon entropy unsuitable as a universal measure. In contrast, the $(k, T_B)$-local Shannon entropy measures image randomness with the same set of parameters regardless of the size of the test image, and thus provides a fairer comparison of randomness among multiple images.

3. Low efficiency: The global Shannon entropy measure requires the pixel information of the entire image, which is costly when the test image is large. The local entropy measure, however, requires only a portion of the pixel information: the $k \cdot T_B$ pixels in the selected blocks.
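The first two weaknesses, together with the shuffling case mentioned in the introduction, can be illustrated with a small NumPy experiment. This is a hedged sketch: the test images (a synthesized intensity ramp, its pixel-shuffled version, and two noise images of different sizes), the grid-based block selection, and all names are our own choices for illustration, not experiments from the source.

```python
import numpy as np


def entropy_bits(pixels: np.ndarray, levels: int = 256) -> float:
    """Shannon entropy (bits) of a set of pixels from its intensity histogram."""
    counts = np.bincount(pixels.ravel(), minlength=levels)
    probs = counts[counts > 0] / pixels.size
    return float(np.sum(probs * np.log2(1.0 / probs)))


def local_entropy(image: np.ndarray, k: int, side: int, rng) -> float:
    """Mean entropy of k distinct side x side tiles (grid-based assumption)."""
    rows, cols = image.shape[0] // side, image.shape[1] // side
    tiles = rng.choice(rows * cols, size=k, replace=False)
    vals = []
    for t in tiles:
        r, c = divmod(int(t), cols)
        vals.append(entropy_bits(image[r * side:(r + 1) * side, c * side:(c + 1) * side]))
    return float(np.mean(vals))


rng = np.random.default_rng(0)

# Weakness 1: a synthesized ramp where each intensity 0..255 fills one column,
# so the histogram is perfectly flat although the image is not random.
ramp = np.tile(np.arange(256, dtype=np.uint8), (256, 1))
shuffled = rng.permutation(ramp.ravel()).reshape(ramp.shape)
print("ramp     global", entropy_bits(ramp), "local", local_entropy(ramp, 30, 32, rng))
print("shuffled global", entropy_bits(shuffled), "local", local_entropy(shuffled, 30, 32, rng))
# The ramp scores 8.0 globally but 5.0 locally (32 distinct values per 32x32 tile);
# shuffling leaves the global score at 8.0 while the local score rises.

# Weakness 2: the same random source scores differently at different image sizes.
small = rng.integers(0, 256, size=(64, 64), dtype=np.uint8)
large = rng.integers(0, 256, size=(1024, 1024), dtype=np.uint8)
print("64x64 noise", entropy_bits(small), "1024x1024 noise", entropy_bits(large))
```

The smaller noise image scores noticeably lower globally only because fewer pixels feed its histogram, which is the size dependence that a fixed $(k, T_B)$ avoids.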